Lumi is a gesture-controlled smart mirror built as a senior capstone project. The mirror is designed to display glanceable information to the user. We imagined a common morning use case in which a user glances at the day ahead, catches up on the news, and even takes a quick selfie. This project was done in collaboration with three colleagues, who are acknowledged below. My contributions included hardware design and fabrication, gesture recognition software, user experience design, and feature engineering.

The mirror display is a 34" 2K high-resolution monitor rotated 90 degrees, with a two-way acrylic mirror affixed in front. Mounted to the back of the monitor is a computer running custom software we designed and built for this project. For gesture input, we used an Xbox Kinect sensor placed below the display.

Fortunately, the Xbox Kinect sensor has a motorized tilting mechanism that allowed us to change the angle of the sensor depending on the user's height for optimal gesture capture. A set of external speakers was also mounted behind the display to serve as audio output for streaming music and playing videos.

During our initial research for this project, we noticed that many existing DIY smart mirrors either used touchscreen input or had no user input at all. We felt that being able to interact directly with the smart mirror was crucial to creating an IoT device that was actually feature-rich and useful. However, two main factors dissuaded us from using a touchscreen: (1) intentionally putting fingerprints on a mirror is never desirable, and (2) a touchscreen at mirror size would have been prohibitively expensive for us. Given the relative ubiquity of Xbox Kinect sensors and their open-source capture libraries, gesture control was the obvious choice.

Given that this was a CS capstone project, the software that powered our mirror display was a crucial component. Our vision for the mirror was to have independent glanceable widgets that would show content and notifications along the left and right columns. These widgets included services like the clock, weather, email, Twitter, stocks, live NPR news streams, and a rotating gallery of photos. The widgets' position and settings can be configured from an easy-to-use web dashboard. In addition to glanceable widgets, we also wanted to create apps that provided more functionality to the mirror. For instance, we took advantage of the mirror by creating an app that can play makeup tutorials from YouTube so a user could easily follow a video tutorial while looking at themselves in the mirror. Other apps we built included a toothbrushing timer and a selfie camera. Given more time, we could easily envision a suite of other apps being built including clothing try-ons, games, and much more.

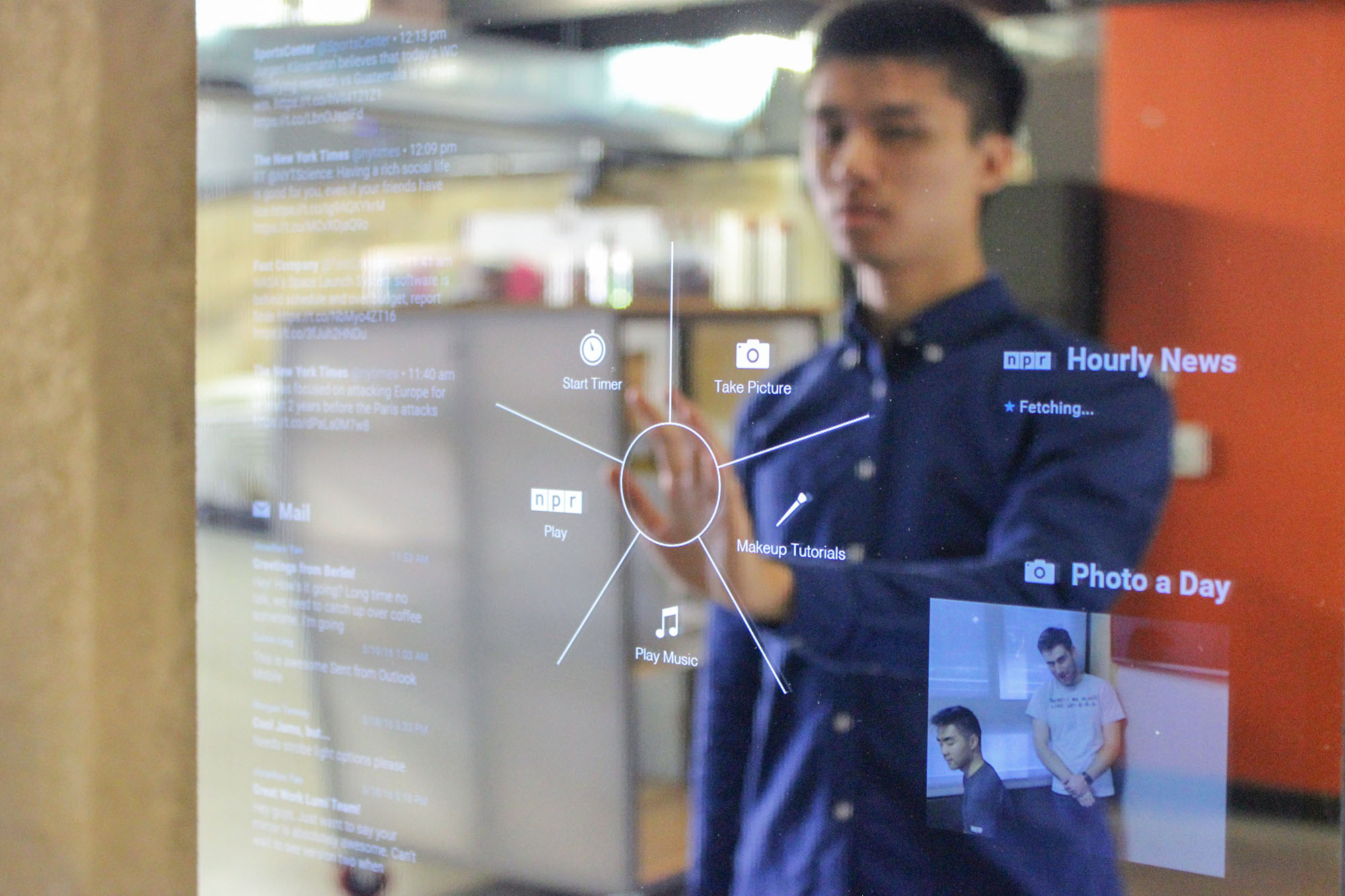

Throughout our design process, we iterated on many interaction and input methods. We ultimately came up with our radial menu design (shown below), which we believe is an intuitive input method. To use the menu, the user simply raises their arm in front of them, and the menu appears with its options arranged radially. To select an option, the user only needs to move their arm slightly in the direction of the desired option and hold it there. After holding for three seconds, the option is selected. The menu options presented change depending on the context; for instance, when playing a video, the menu presents options like play/pause, skip, and back. When designing this input method, we wanted to ensure that gesture controls were as simple as possible. By avoiding finicky movements, such as finger gestures, we created a gesture system that was very forgiving yet accurate and fluid.
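The hold-to-select behavior can be illustrated with a short Python sketch. This is not the code that ran on the mirror; it is a minimal example under assumed conventions. The `RadialMenu` class, the `dead_zone` threshold, and the idea of measuring the hand's offset `(dx, dy)` from the point where the arm was first raised are all hypothetical. Only the three-second hold comes from the description above.

```python
import math
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class RadialMenu:
    """Hypothetical dwell-based radial menu; names and thresholds are assumptions."""
    options: List[str]           # laid out clockwise, first option at the top
    dead_zone: float = 0.10      # assumed minimum hand offset before an option counts
    dwell_seconds: float = 3.0   # hold time described in the text
    _candidate: Optional[str] = field(default=None, init=False)
    _since: float = field(default=0.0, init=False)

    def sector(self, dx: float, dy: float) -> Optional[str]:
        """Map a hand offset (dx right, dy up) to the option it points at."""
        if math.hypot(dx, dy) < self.dead_zone:
            return None  # hand is still near the neutral position
        # Angle measured clockwise from straight up, in [0, 360).
        angle = math.degrees(math.atan2(dx, dy)) % 360.0
        width = 360.0 / len(self.options)
        # Shift by half a sector so each option is centered on its direction.
        index = int(((angle + width / 2) % 360.0) // width)
        return self.options[index]

    def update(self, dx: float, dy: float, now: float) -> Optional[str]:
        """Feed one hand sample; return an option once it has been held long enough."""
        option = self.sector(dx, dy)
        if option != self._candidate:
            # Pointing somewhere new: restart the hold timer.
            self._candidate = option
            self._since = now
            return None
        if option is not None and now - self._since >= self.dwell_seconds:
            self._candidate = None  # require a fresh hold for the next selection
            return option
        return None
```

Because only the coarse direction of the arm matters, small wobbles within a sector do not reset the timer, which is one way to get the forgiving behavior described above.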

Because many of the features we wanted to build for this mirror were web-enabled services, we used Node.js as the primary server platform. The display simply runs a fullscreen web browser that loads a webpage connected to the local Node.js server. Within the server, we built frameworks and models for supporting widgets and apps as well as configuration interfaces. We also created a custom server/client communication protocol that was particularly useful for handling data streams from the Xbox Kinect. For gesture recognition, we built a separate Python server that took input from the Xbox Kinect sensor and streamed the input data to the Node.js server.
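To give a sense of what streaming gesture data between two processes involves, here is a minimal Python sketch that frames hand positions as newline-delimited JSON. The actual protocol we built is not described here, so everything in this example is an assumption made for illustration: the message fields (`type`, `x`, `y`, `t`), the `encode_frame` function, and the `FrameDecoder` class are hypothetical. The decoder is written in Python only to keep the example in one language; in the real system the receiving side was the Node.js server.

```python
import json
from typing import Dict, List


def encode_frame(x: float, y: float, timestamp: float) -> bytes:
    """Serialize one hand sample as a single JSON line (assumed message format)."""
    message = {"type": "hand", "x": x, "y": y, "t": timestamp}
    return (json.dumps(message) + "\n").encode("utf-8")


class FrameDecoder:
    """Reassemble complete JSON lines from arbitrarily sized stream chunks."""

    def __init__(self) -> None:
        self._buffer = b""

    def feed(self, chunk: bytes) -> List[Dict]:
        """Add received bytes and return every message completed so far."""
        self._buffer += chunk
        # Everything before the last newline is complete; the remainder is kept.
        *complete, self._buffer = self._buffer.split(b"\n")
        return [json.loads(line) for line in complete if line]
```

A stream socket can split or merge messages arbitrarily, so the receiver buffers bytes until it sees a newline rather than assuming one read equals one message.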

This project was done in collaboration with Dan Guo, Sloane Sturzenegger, and Jonathan Yan (pictured above). It was awarded Star of Show, the only recognition given in the capstone project course.